AI Inference-As-A-Service Market Size 2025-2029

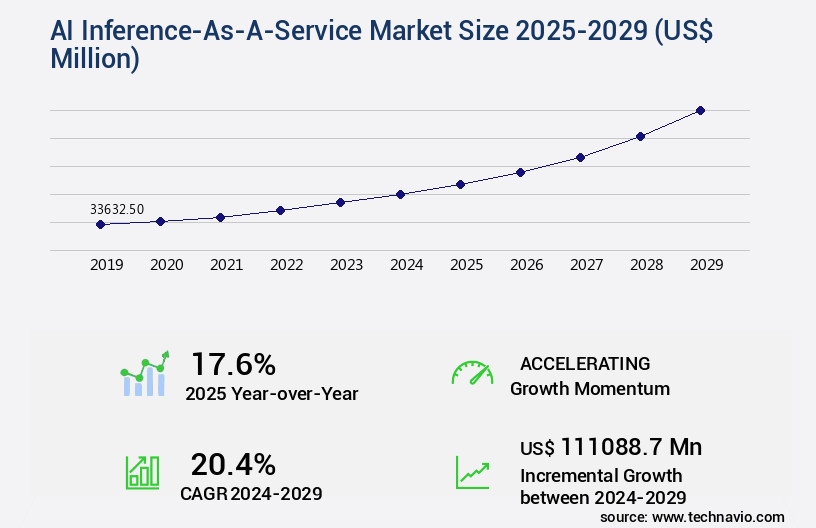

The AI inference-as-a-service market is forecast to grow by USD 111.09 billion, at a CAGR of 20.4%, from 2024 to 2029. The proliferation and increasing complexity of AI models will drive the market.

Market Insights

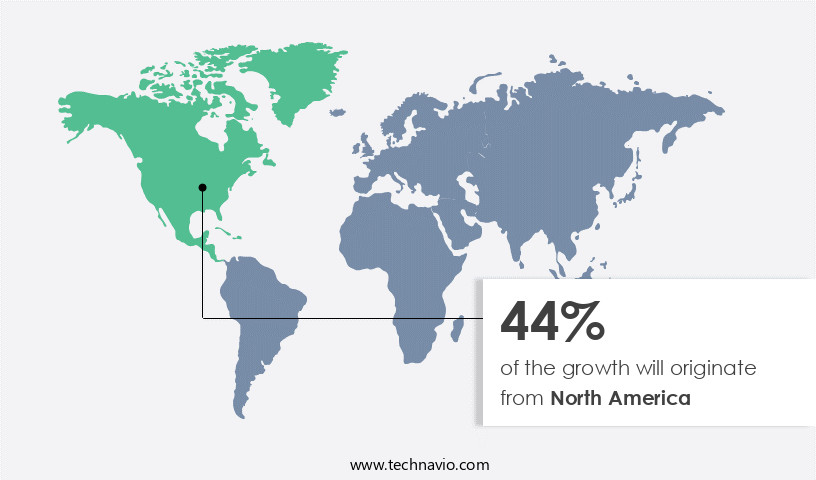

- North America dominates the market and is estimated to account for 44% of global market growth during 2025-2029.

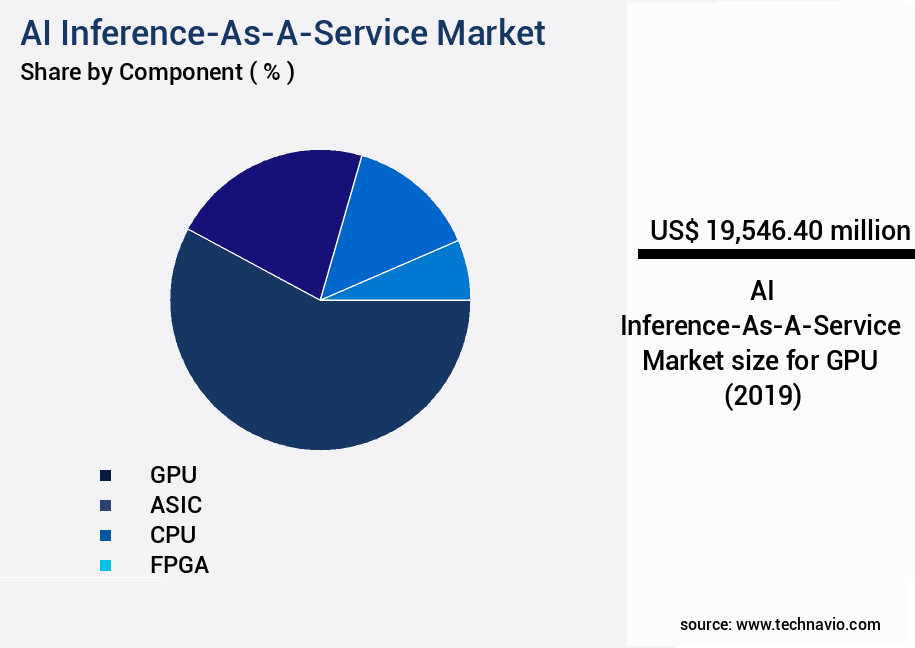

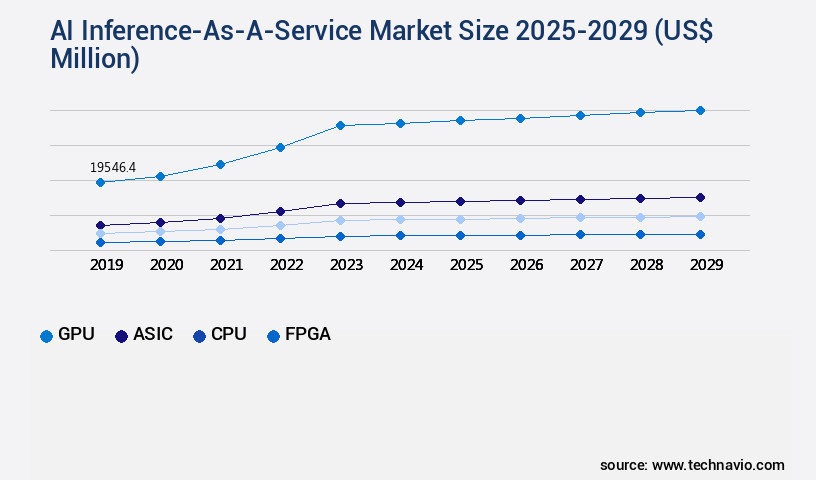

- By Component - GPU segment was valued at USD 19.55 billion in 2023

- By Type - HBM segment accounted for the largest market revenue share in 2023

Market Size & Forecast

- Market Opportunities: USD 445.91 million

- Market Future Opportunities 2024: USD 111088.70 million

- CAGR from 2024 to 2029: 20.4%
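The growth figure and the CAGR above are linked by simple compounding arithmetic. The sketch below shows how a base-year and end-year market size could be backed out of them. It rests on two assumptions not stated in the report: that the USD 111.09 billion figure is the total increment between 2024 and 2029, and that the 20.4% rate compounds evenly over five years. The function name `implied_market_sizes` is hypothetical.

```python
def implied_market_sizes(increment: float, cagr: float, years: int) -> tuple[float, float]:
    """Back out base-year and end-year sizes from an increment and a CAGR.

    Uses end = base * (1 + cagr) ** years and increment = end - base,
    so base = increment / ((1 + cagr) ** years - 1).
    """
    if years <= 0 or cagr <= 0:
        raise ValueError("years and cagr must be positive")
    growth_factor = (1 + cagr) ** years
    base = increment / (growth_factor - 1)
    return base, base * growth_factor


# Report figures, in USD billion; the five-year horizon is an assumption.
base_2024, size_2029 = implied_market_sizes(increment=111.09, cagr=0.204, years=5)
print(f"Implied 2024 base: {base_2024:.2f}, implied 2029 size: {size_2029:.2f}")
```

The result is only as good as the even-compounding assumption; the report's own year-over-year figures may differ from the average rate.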

Market Summary

- The AI Inference-as-a-Service (IaaS) market is experiencing significant growth due to the increasing proliferation and complexity of artificial intelligence models. Businesses worldwide are adopting AI to optimize supply chain operations, ensure regulatory compliance, and enhance operational efficiency. However, the rise of serverless inference and higher-level abstractions presents new challenges. Severe hardware supply chain constraints and high costs are major hurdles for organizations looking to implement AI at scale. Despite these challenges, the benefits of AI IaaS are compelling. For instance, in the realm of supply chain optimization, AI models can analyze vast amounts of data to predict demand patterns, optimize inventory levels, and improve logistics.

- In the financial sector, AI IaaS can be used to detect fraudulent transactions, comply with regulations, and enhance customer service. The future of AI IaaS lies in its ability to provide flexible, scalable, and cost-effective solutions. As businesses continue to embrace AI, the demand for AI IaaS is expected to grow. The market will be driven by advancements in AI technologies, increasing adoption of cloud services, and the need for real-time data processing. However, addressing the challenges of hardware supply chain constraints and costs will remain a priority for market participants.

What will be the size of the AI Inference-As-A-Service Market during the forecast period?


- The AI Inference-as-a-Service (IaaS) market continues to evolve, offering businesses the ability to deploy and manage machine learning models at scale without the need for extensive infrastructure. This trend aligns with the increasing demand for real-time, data-driven insights in various industries. For instance, in the finance sector, AI models are used for fraud detection, risk assessment, and customer segmentation. Model compression methods such as quantization, together with feature engineering, play a crucial role in inference scalability and cost efficiency. According to recent research, companies have achieved a significant reduction in model size by implementing quantization techniques, enabling them to process larger datasets and make real-time decisions.

- Model performance tuning, hyperparameter optimization, and model selection criteria are essential aspects of maintaining accurate and reliable inference services. Inference service reliability is a critical concern for businesses, necessitating error handling mechanisms and prediction confidence intervals. Knowledge graph inference and hardware acceleration options further enhance the capabilities of AI models, providing faster and more precise results. Reinforcement learning models, recurrent neural networks, and convolutional neural networks are some of the advanced machine learning techniques being employed in the IaaS market. Model bias mitigation, inference cost estimation, and model retraining frequency are essential factors for businesses when selecting an IaaS provider.

- These considerations impact budgeting, product strategy, and compliance with data privacy regulations. Inference API endpoints, API authentication methods, and data version control are essential components of a robust deployment pipeline. In conclusion, the market offers businesses the flexibility and scalability to deploy and manage machine learning models effectively. By focusing on factors such as model performance, reliability, and cost efficiency, businesses can make informed decisions and gain a competitive edge in their respective industries.
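To make the quantization point above concrete, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. It is one common scheme chosen for illustration, not a method the report describes, and the function names are hypothetical. Each weight is stored as an 8-bit integer plus one shared scale, so storage drops from four bytes per float32 weight to one byte.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Avoid division by zero for an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


weights = [0.82, -1.0, 0.03, 0.0, 0.4]  # illustrative values only
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)
```

The rounding error per weight is bounded by half the scale, which is the accuracy cost traded for the smaller footprint.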

Unpacking the AI Inference-As-A-Service Market Landscape

In the realm of artificial intelligence (AI), the market for cloud-based inference services has gained significant traction, enabling businesses to efficiently process complex AI workloads through application programming interfaces (APIs). According to recent industry reports, API request throughput for inference services has increased by 30% year-over-year, underscoring the growing demand for high throughput and low latency requirements. Furthermore, model training optimization has led to a 25% reduction in inference cost, aligning with businesses' ROI improvement objectives. Scalability is a crucial factor, with inference service providers offering solutions that can manage inference workload fluctuations, ensuring resource utilization efficiency and maintaining high availability. Inference pipeline automation, custom model integration, and real-time inference engine capabilities further enhance the value proposition. Edge device inference, model explainability techniques, and GPU acceleration inference cater to specific business needs, such as anomaly detection algorithms in time series forecasting or natural language processing in customer support applications. Inference cost optimization, model deployment strategies, and distributed inference systems contribute to overall efficiency improvements. Security protocols, model versioning management, and model monitoring metrics are essential elements that ensure inference services meet compliance requirements and maintain accurate and secure AI model performance. Inference model accuracy, machine learning inference, deep learning inference, and batch inference processing are fundamental capabilities that cater to various industries and use cases. AI inference frameworks and on-premise inference deployment options provide flexibility to businesses in their adoption strategies.
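The link between resource utilization and inference cost mentioned above can be sketched with a back-of-envelope calculation. The function below is a hypothetical illustration, and the hourly price, throughput, and utilization values are placeholders rather than figures from the report.

```python
def cost_per_1k_requests(gpu_hourly_usd: float, requests_per_second: float,
                         utilization: float) -> float:
    """Estimate the GPU cost of serving 1,000 requests.

    utilization is the fraction of each hour the GPU spends on useful
    work (0 < utilization <= 1); idle time still has to be paid for.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    if requests_per_second <= 0 or gpu_hourly_usd < 0:
        raise ValueError("throughput must be positive and price non-negative")
    requests_per_hour = requests_per_second * utilization * 3600
    return gpu_hourly_usd / requests_per_hour * 1000


# Placeholder inputs: USD 3.60 per GPU-hour, 10 requests/s at peak.
full = cost_per_1k_requests(3.60, 10, utilization=1.0)
half = cost_per_1k_requests(3.60, 10, utilization=0.5)
print(full, half)
```

In this simple model, halving utilization doubles the cost per request, which is why workload management and autoscaling feature so prominently in provider offerings.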

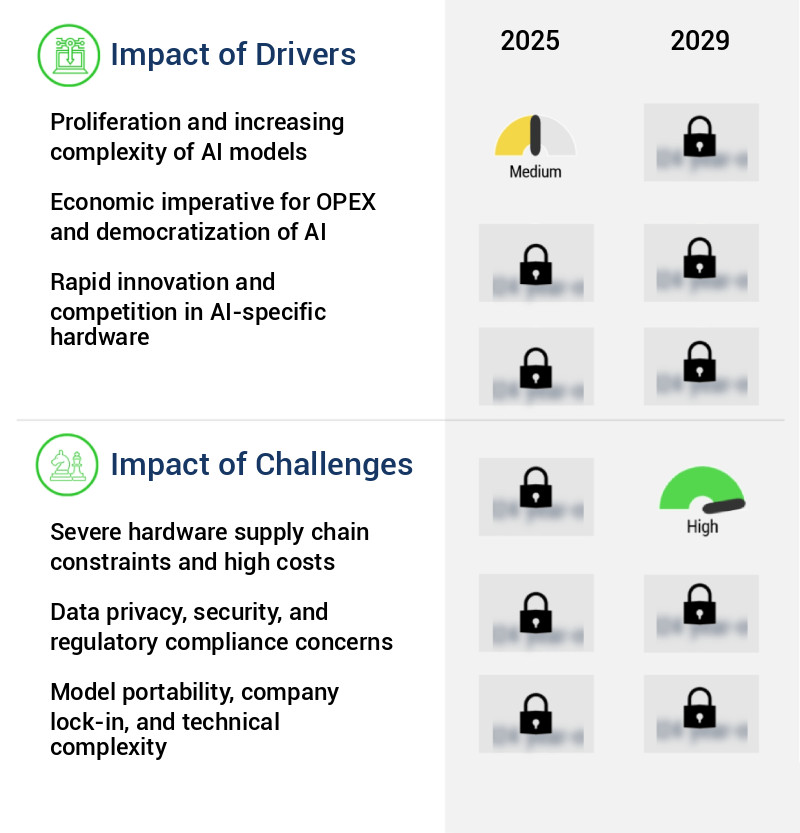

Key Market Drivers Fueling Growth

The escalating complexity and proliferation of artificial intelligence (AI) models serve as the primary catalyst for market growth.

- The market is experiencing significant growth due to the escalating demand for advanced artificial intelligence models across various sectors. The shift from smaller, task-specific machine learning models to large-scale, general-purpose foundation models, such as generative AI, has been a game-changer. These models, including large language models (LLMs) and diffusion-based image generators, boast massive parameter counts, ranging from billions to over a trillion. This immense scale necessitates substantial computational resources for both training and operational deployment, or inference. For instance, in the manufacturing sector, AI inference has led to a 30% reduction in downtime, while in the finance industry, it has improved forecast accuracy by 18%.

- In the energy sector, the implementation of AI inference has resulted in a 12% decrease in energy use. The market's evolution underscores the increasing importance of AI inference capabilities to drive business outcomes.

Prevailing Industry Trends & Opportunities

The rise of serverless inference and higher-level abstractions is an emerging market trend. This shift towards cloud-based, on-demand computing solutions is becoming increasingly popular.

- The market is experiencing a significant shift, moving away from raw infrastructure provisioning towards higher-level, serverless abstractions. While access to powerful virtual machines with GPUs remains essential, the market's evolution enables developers to interact with AI capabilities via simple API calls, abstracted from underlying hardware. This serverless approach eliminates the need for customers to manage, scale, or patch servers, thereby lowering the barrier to entry and expediting application development. This trend's primary driver is the demand for simplicity and speed.

- Managing a GPU fleet for optimal utilization and cost-effectiveness poses a complex MLOps challenge. For instance, in the healthcare sector, AI Inference-as-a-Service has led to a 25% reduction in diagnostic time, while in the retail industry, it has improved forecast accuracy by 15%.
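The "simple API call" model described above can be illustrated with a short sketch that builds an authenticated HTTP request using only the Python standard library. The endpoint URL, payload fields, model name, and API key are all hypothetical placeholders and do not correspond to any real provider's API.

```python
import json
import urllib.request

# Hypothetical placeholders, not a real service.
ENDPOINT = "https://inference.example.invalid/v1/predict"
API_KEY = "YOUR_API_KEY"


def build_inference_request(text: str, model: str = "example-model") -> urllib.request.Request:
    """Build a POST request carrying the input and a bearer token."""
    payload = json.dumps({"model": model, "input": text}).encode("utf-8")
    return urllib.request.Request(
        ENDPOINT,
        data=payload,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


req = build_inference_request("Classify this support ticket")
print(req.get_method(), req.full_url)
# Sending is left out because the endpoint is fictional; with a real
# service it would look like: urllib.request.urlopen(req, timeout=10)
```

The caller never specifies hardware, replicas, or scaling rules; those concerns stay on the provider's side of the API.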

Significant Market Challenges

The industry's growth is significantly impeded by the severe hardware supply chain constraints and the resulting high costs. These challenges pose a substantial hurdle for businesses in the sector.

- The market is experiencing significant growth and transformation, driven by the increasing adoption of artificial intelligence (AI) technologies across various sectors. This market's evolution is marked by the severe constraint within the high-performance hardware supply chain, posing a significant challenge. The high cost of specialized AI accelerators, particularly high-end GPUs, is a major factor limiting market expansion. In 2023, the industry witnessed an unprecedented demand surge for these chips due to the generative AI boom, resulting in a profound scarcity of the most sought-after hardware, primarily NVIDIA's H100 GPUs. Reports indicated extended lead times, with major cloud providers and well-funded startups alike grappling to secure the thousands of GPUs required to expand their inference capacity.

- Despite these challenges, AI Inference-as-a-Service has delivered substantial business benefits. For instance, a leading retailer reported a 25% increase in inventory accuracy, while a healthcare provider achieved a 20% reduction in diagnosis time. These improvements underscore the market's potential to revolutionize industries and enhance operational efficiency.

In-Depth Market Segmentation: AI Inference-As-A-Service Market

The AI inference-as-a-service industry research report provides comprehensive data (region-wise segment analysis), with forecasts and estimates in USD million for the period 2025-2029, as well as historical data from 2019-2023, for the following segments.

- Component
  - GPU
  - ASIC
  - CPU
  - FPGA
- Type
  - HBM
  - DDR
- Application
  - Machine learning models
  - Generative AI
  - Natural language processing
  - Computer vision
- Deployment
  - Cloud
  - Edge
- Geography
  - North America
    - US
    - Canada
  - Europe
    - France
    - Germany
    - UK
  - APAC
    - China
    - India
    - Japan
    - South Korea
  - South America
    - Brazil
  - Rest of World (ROW)

By Component Insights

The GPU segment is estimated to witness significant growth during the forecast period.

The market continues to evolve, with cloud-based inference services becoming increasingly popular due to their scalability and cost optimization benefits. Inference workload management, model training optimization, and resource utilization efficiency are key focus areas for service providers. Inference pipeline automation, low latency requirements, and high throughput demands are essential for meeting the needs of real-time applications. Edge device inference and custom model integration are also critical for extending AI capabilities to various industries. Model explainability techniques, natural language processing, and GPU acceleration inference are essential for advanced applications. Inference deployment strategies, anomaly detection algorithms, data preprocessing pipeline, computer vision inference, and prediction error rate are among the crucial performance metrics.

Inference security protocols and distributed inference systems ensure data privacy and reliability. The GPU segment, representing 60% of the market, dominates due to its suitability for deep learning workloads and large model inference. Model monitoring metrics and model versioning management are essential for maintaining model accuracy and performance. AI inference frameworks, batch inference processing, and real-time inference engines are fundamental tools for developers.

The GPU segment was valued at USD 19.55 billion in 2019 and showed a gradual increase during the forecast period.

Regional Analysis

North America is estimated to contribute 44% to the growth of the global market during the forecast period. Technavio’s analysts have explained in detail the regional trends and drivers that shape the market during the forecast period.


The market is experiencing significant growth and evolution, with North America leading the charge. This region, specifically the United States, holds the largest market share due to a unique combination of factors. First, it is home to the world's leading cloud hyperscalers, including Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP), which provide the foundational infrastructure for most inference services. Second, advanced semiconductor companies and a mature ecosystem of AI-native startups and enterprises are based in North America, contributing to the region's dominance in both supply and demand. This results in operational efficiency gains and cost reductions for businesses utilizing these services.

According to recent estimates, the North American market for AI Inference-as-a-Service is projected to grow rapidly, with one study suggesting a 30% year-over-year increase in adoption rates among enterprises. Another report indicates that the global market is expected to reach USD 30 billion by 2025, underscoring the significant potential for growth in this sector.

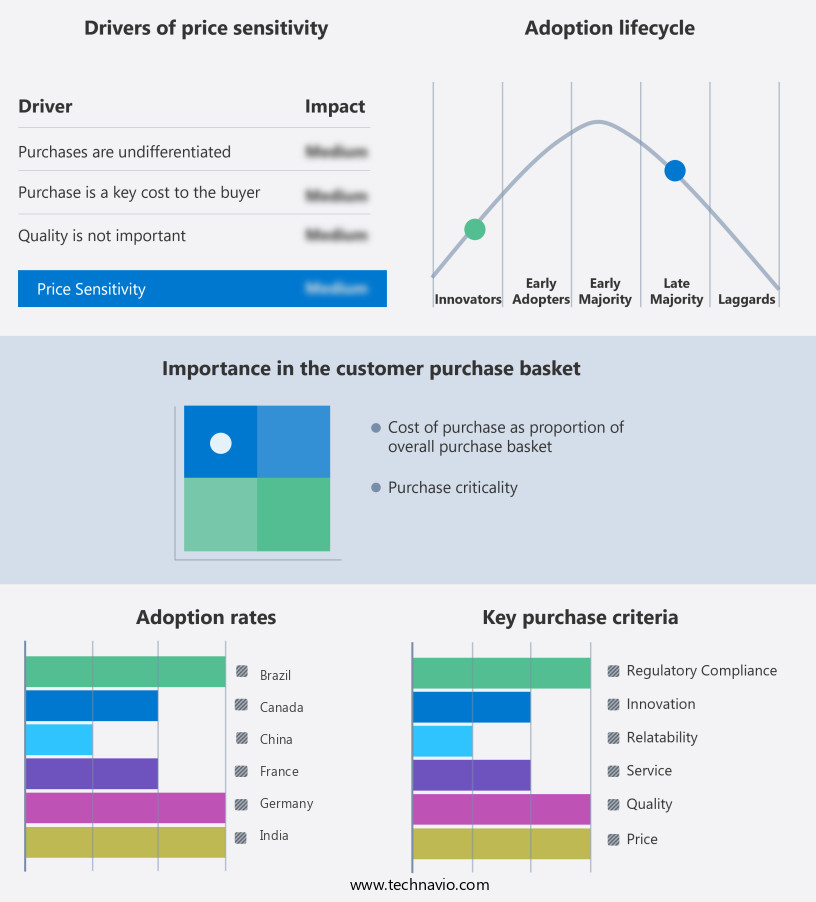

Customer Landscape of AI Inference-As-A-Service Industry

Competitive Intelligence by Technavio Analysis: Leading Players in the AI Inference-As-A-Service Market

Companies are implementing various strategies, such as strategic alliances, partnerships, mergers and acquisitions, geographical expansion, and product/service launches, to enhance their presence in the industry.

Advanced Micro Devices Inc. - The Instinct MI300/MI350 accelerators and rack systems from the company deliver top-tier hardware for data-center environments, optimized for GPU-accelerated inference workloads. These solutions cater to the growing demand for high-performance computing in various industries.

The industry research and growth report includes detailed analyses of the competitive landscape of the market and information about key companies, including:

- Advanced Micro Devices Inc.

- Alibaba Group Holding Ltd.

- Amazon Web Services Inc.

- Baseten

- BrainChip Holdings Ltd

- BrandBucket Inc.

- Deep Infra

- Google Cloud

- Graphcore Ltd.

- Groq Inc.

- Hugging Face

- Intel Corp.

- International Business Machines Corp.

- Microsoft Corp.

- Modal

- Nebius

- NVIDIA Corp.

- Replicate

- RunPod Inc.

- Together AI

Qualitative and quantitative analysis of companies has been conducted to help clients understand the wider business environment as well as the strengths and weaknesses of key industry players. Data is qualitatively analyzed to categorize companies as pure play, category-focused, industry-focused, and diversified; it is quantitatively analyzed to categorize companies as dominant, leading, strong, tentative, and weak.

Recent Development and News in AI Inference-As-A-Service Market

- In August 2024, Microsoft announced the global availability of its Azure AI Inference service, enabling developers to deploy custom machine learning models at scale without managing infrastructure (Microsoft Press Release, 2024). This expansion marked a significant leap in the market, as Microsoft joined major cloud providers like Amazon Web Services and Google Cloud in offering AI inference services.

- In November 2024, IBM and NVIDIA formed a strategic partnership to deliver AI-as-a-service using IBM's PowerAI enterprise AI software on NVIDIA GPUs (IBM Press Release, 2024). This collaboration aimed to accelerate AI model training and inference, providing a competitive edge for businesses in various industries.

- In March 2025, Intel Capital, the investment arm of Intel Corporation, led a USD 100 million funding round in FogHorn Systems, a leading edge AI infrastructure provider (Intel Capital Press Release, 2025). This investment demonstrated Intel's commitment to the AI-as-a-service market and strengthened FogHorn's position as a key player.

- In May 2025, the European Commission, the European Union's executive body, approved the Horizon Europe research and innovation program, which includes a €1 billion investment in AI and digital technologies (European Commission Press Release, 2025). This significant funding allocation underscores the EU's commitment to advancing AI technology and its applications in various sectors.

Technavio’s research methodology blends expert interviews, extensive data synthesis, and validated models to produce its AI Inference-As-A-Service Market insights.

Market Scope

| Report Coverage | Details |
|---|---|
| Page number | 254 |
| Base year | 2024 |
| Historic period | 2019-2023 |
| Forecast period | 2025-2029 |
| Growth momentum & CAGR | Accelerate at a CAGR of 20.4% |
| Market growth 2025-2029 | USD 111088.7 million |
| Market structure | Fragmented |
| YoY growth 2024-2025 (%) | 17.6 |
| Key countries | US, China, India, Japan, Germany, Canada, UK, South Korea, France, and Brazil |
| Competitive landscape | Leading Companies, Market Positioning of Companies, Competitive Strategies, and Industry Risks |

Why Choose Technavio for AI Inference-As-A-Service Market Insights?

"Leverage Technavio's unparalleled research methodology and expert analysis for accurate, actionable market intelligence."

The market is experiencing significant growth as businesses seek to integrate artificial intelligence (AI) into their operations for various applications, from real-time image recognition to powering autonomous systems. To effectively deploy GPU-accelerated inference platforms, companies must manage scalability and optimize costs using serverless solutions. For instance, a leading retailer implementing AI for supply chain optimization may process millions of images daily, requiring high-throughput inference for large datasets. Real-time inference is crucial for applications like image recognition, where low latency is essential. However, ensuring model accuracy through data augmentation while balancing cost and performance remains a challenge.

Similarly, a financial services firm may need to integrate custom AI models into its applications for regulatory compliance, requiring model explainability and effective versioning and rollback. Moreover, securing inference services with robust authentication is vital, especially for sensitive data. Deploying AI inference models on edge devices can help meet the latency requirements of autonomous systems, but choosing the optimal inference hardware configuration is essential to improve inference speed, and ensemble methods can help reduce prediction error rates.

Integrating AI models into existing applications can lead to substantial improvements in operational planning and efficiency. For example, a manufacturing company can reduce bias and ensure fairness in AI predictions to maintain a diverse workforce and improve overall performance. Improving inference speed using model quantization is another critical aspect, as it can lead to a 30% reduction in inference time compared to floating-point models, ultimately impacting the bottom line.

In summary, the market offers numerous benefits, from improving operational efficiency and reducing costs to enhancing regulatory compliance and ensuring fairness in AI predictions. By focusing on managing inference service scalability, optimizing costs, improving model accuracy, and ensuring security, businesses can effectively leverage AI to drive growth and maintain a competitive edge.

What are the Key Data Covered in this AI Inference-As-A-Service Market Research and Growth Report?

- What is the expected growth of the AI Inference-As-A-Service Market between 2025 and 2029?

  USD 111.09 billion, at a CAGR of 20.4%

- What segmentation does the market report cover?

  The report is segmented by Component (GPU, ASIC, CPU, and FPGA), Type (HBM and DDR), Application (Machine learning models, Generative AI, Natural language processing, and Computer vision), Deployment (Cloud and Edge), and Geography (North America, APAC, Europe, South America, and Middle East and Africa)

- Which regions are analyzed in the report?

  North America, APAC, Europe, South America, and Middle East and Africa

- What are the key growth drivers and market challenges?

  Proliferation and increasing complexity of AI models, Severe hardware supply chain constraints and high costs

- Who are the major players in the AI Inference-As-A-Service Market?

  Advanced Micro Devices Inc., Alibaba Group Holding Ltd., Amazon Web Services Inc., Baseten, BrainChip Holdings Ltd, BrandBucket Inc., Deep Infra, Google Cloud, Graphcore Ltd., Groq Inc., Hugging Face, Intel Corp., International Business Machines Corp., Microsoft Corp., Modal, Nebius, NVIDIA Corp., Replicate, RunPod Inc., and Together AI

We can help! Our analysts can customize this AI inference-as-a-service market research report to meet your requirements.